diff --git a/cloud-accounts/changing-instance-types.mdx b/cloud-accounts/changing-instance-types.mdx

deleted file mode 100644

index 61d8c91..0000000

--- a/cloud-accounts/changing-instance-types.mdx

+++ /dev/null

@@ -1,80 +0,0 @@

----

-title: "Changing Instance Types"

-sidebarTitle: "Changing Instance Types"

----

-

-## Prerequisites

-

-If you have not yet provisioned a cluster on one of the cloud providers, check out one of the links below to get started

-

-

-

-

-

-

-

-## Select an instance type

-

-1. Select the `Infrastructure` section on the left side of the dashboard

-2. Select the `Overview` tab

-

-

-3. Expand the `Default node group` dropdown

-

-

- The following settings should be displayed:

-

- - **Machine type** - The underlying instance of the nodes that your applications are running on. The options here will vary per cloud provider.

- - **Maximum number of nodes** - The maximum number of nodes that your cluster will autoscale to under high load.

- - **Minimum number of nodes** - The minimum number of nodes that your cluster will autoscale to under low load.

- - **Disk size** - The storage capacity of the disk attached to each node in the cluster.

-

-4. Select the appropriate **Machine type** from the dropdown, then scroll to the bottom of the page, and click `Update`.

-

-

-- For more information on each cloud provider and their specific instance types, see our [FAQ at the bottom of this page](/cloud-accounts/changing-instance-types#how-much-will-my-instance-type-cost)

-

-## FAQ

-

-### Why does my cloud provider offer an instance that is not available on Porter?

-

-If you have an instance type that you would like to use in mind, it may not be available in the same region as your cluster.

-This happens frequently as each cloud provider releases new instances on a phased basis, region by region.

-

-Porter extensively tests the compatibility of instance types across a wide range of cluster and application configurations.

-While the instance types that are available on Porter suit the most common needs of our users, we will be happy to add requested instance types for any cloud provider.

-Message us from the dashboard through the chat bot.

-

-### How much will my instance type cost?

-

-The specific instance types that are available for each cloud provider, along with their pricing can be found on their docs at the links below.

-

-

-

-

-

-

diff --git a/cloud-accounts/cluster-observability.mdx b/cloud-accounts/cluster-observability.mdx

new file mode 100644

index 0000000..1e77a91

--- /dev/null

+++ b/cloud-accounts/cluster-observability.mdx

@@ -0,0 +1,82 @@

+---

+title: "Cluster Observability"

+sidebarTitle: "Cluster Observability"

+description: "Monitor cluster health, resource usage, and infrastructure metrics"

+---

+

+Porter provides built-in observability for your cluster infrastructure through the **Infrastructure** dashboard. Access it by clicking **Infrastructure** in the left sidebar.

+

+---

+

+## Pods

+

+The **Pods** tab provides a real-time view of all pods running in your cluster.

+

+- **Search**: Filter pods by name

+- **Filters**: Filter by status or namespace

+

+Each pod displays:

+

+| Column | Description |

+|--------|-------------|

+| **Pod name** | The name of the pod |

+| **Namespace** | Kubernetes namespace (e.g., `kube-system`, `default`) |

+| **Status** | Current state (Running, Pending, Failed, etc.) |

+| **Ready** | Container readiness (e.g., `1/1`) |

+| **Restarts** | Number of container restarts |

+| **CPU** | CPU usage |

+| **Memory** | Memory usage |

+| **Memory %** | Percentage of memory limit used |

+| **Age** | Time since pod creation |
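+
+If you have `kubectl` access to the cluster, you can cross-check the **Pods** tab from a terminal. This is a minimal sketch. It assumes your kubeconfig already points at the cluster (this page doesn't cover how to obtain one) and that the Kubernetes Metrics Server is installed, because `kubectl top` depends on it. The function names are illustrative and not part of Porter.
+
+```bash
+# Hypothetical helpers that approximate the Pods tab with kubectl.
+
+# Name, namespace, ready count, status, restarts, and age for pods in every namespace.
+# Extra arguments are passed through, e.g. a field selector to filter by status.
+list_pods() {
+  kubectl get pods --all-namespaces "$@"
+}
+
+# Current CPU and memory usage per pod (requires the Metrics Server).
+pod_usage() {
+  kubectl top pods --all-namespaces "$@"
+}
+```
+
+For example, `list_pods --field-selector=status.phase=Pending` shows only pods stuck in `Pending`. `kubectl top` reports absolute usage. The dashboard's **Memory %** column also compares usage against each pod's memory limit.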

+

+---

+

+## Nodes

+

+The **Nodes** tab shows your cluster's node groups and individual nodes.

+

+### Node Groups View

+

+The default view displays all node groups:

+

+| Column | Description |

+|--------|-------------|

+| **Node group** | Name of the node group (e.g., default, monitoring, system) |

+| **Instance type** | The machine type for nodes in this group |

+| **Utilization** | Visual indicator of resource usage |

+| **Actions** | Link to view detailed metrics |

+

+### Individual Nodes View

+

+Click on a node group to see individual nodes:

+

+- **Node name**: The cloud provider's node identifier

+- **Node group**: Which node group this node belongs to

+- **Instance type**: The machine type

+- **CPU**: CPU utilization shown as utilized (yellow) vs reserved (blue)

+- **Memory**: Memory utilization shown as utilized (yellow) vs reserved (blue)

+- **Status**: Node health status (Ready, NotReady)

+

+Click **Metrics >** on any node group to view historical instance counts over time.
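+
+A rough command-line view of the **Nodes** tab is sketched below. It makes the same `kubectl` and Metrics Server assumptions as above. `node.kubernetes.io/instance-type` is a standard Kubernetes node label. The node group label differs by provider, and the values used here are assumptions about how your nodes are labeled: `eks.amazonaws.com/nodegroup` on EKS managed node groups, `cloud.google.com/gke-nodepool` on GKE, and `kubernetes.azure.com/agentpool` on AKS.
+
+```bash
+# Hypothetical helpers that approximate the Nodes tab with kubectl.
+
+# One row per node with its status, plus instance type and node group columns.
+list_nodes() {
+  local group_label="${1:-eks.amazonaws.com/nodegroup}"
+  kubectl get nodes \
+    --label-columns="node.kubernetes.io/instance-type,${group_label}"
+}
+
+# Current CPU and memory usage per node (requires the Metrics Server).
+node_usage() {
+  kubectl top nodes
+}
+
+# Requested CPU and memory for one node, from its "Allocated resources" summary.
+node_reservations() {
+  kubectl describe node "$1" | grep -A 8 "Allocated resources"
+}
+```
+
+Pass the label for your provider, for example `list_nodes cloud.google.com/gke-nodepool` on GKE. As an assumption (this page doesn't document it), the yellow "utilized" bar corresponds roughly to `kubectl top` output, and the blue "reserved" bar to the resource requests listed under **Allocated resources**.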

+

+---

+

+## Integrating External Monitoring

+

+For application-level monitoring and alerting, integrate with external observability platforms:

+

+

+

+ Full-stack monitoring with APM, logs, and infrastructure metrics

+

+

+ Application performance monitoring and alerting

+

+

+ Dashboards and visualization for metrics and logs

+

+

+

+See [Third party observability](/addons/third-party-observability) or reach out to support for more information.

+

+

diff --git a/cloud-accounts/cluster-upgrades.mdx b/cloud-accounts/cluster-upgrades.mdx

index 9dd32d4..7cd06a4 100644

--- a/cloud-accounts/cluster-upgrades.mdx

+++ b/cloud-accounts/cluster-upgrades.mdx

@@ -1,10 +1,12 @@

---

title: "Cluster Upgrades"

+sidebarTitle: "Cluster Upgrades"

+description: "How Porter manages Kubernetes upgrades for your cluster"

---

Keeping your Kubernetes clusters up-to-date is essential for ensuring security, stability, and access to the latest features built by the wider Kubernetes community as well as the underlying public cloud. To that end, we take care of managed Kubernetes upgrades for all clusters provisioned through our platform. Our automated upgrade process ensures your clusters remain current without disrupting your workloads, so you can focus on building and deploying your applications while we handle the complexities of cluster maintenance.

-# Shared Responsibility Model

+## Shared Responsibility Model

We've endeavoured to build a world-class cluster management system which is able to manage and upgrade customer infrastructure without causing disruption to customer workloads. To that end, we've defined a shared responsibility model which maps out the roles played by Porter's engineering/SRE teams as well as customers to ensure the best possible experience with upgrades.

@@ -30,11 +32,11 @@ More documentation around zero-downtime deployments may be found [here](/configu

3. Maintaining a constant stream of communication around upgrade timelines and statuses.

-# Upgrade Calendar

+## Upgrade Calendar

Kubernetes follows a release cycle where there are - approximately - three minor version releases a year. Every release is followed by a period where public clouds integrate the new version into their managed Kubernetes offerings and run tests to ensure compatibility with the underlying cloud. Our upgrade calendar is thus dependent on both release cycles. To account for that, we carry out cluster upgrades twice a year, where we "leapfrog" over versions to ensure customer clusters are running the _latest stable_ version of Kubernetes. These are typically carried out once towards the end of Q1/beginning of Q2 and then later towards the end of Q3.

-# Upgrade Path

+## Upgrade Path

When a new version of upstream Kubernetes is released, we closely track the corresponding release on public clouds in conjunction with the wider community as well as our public cloud partners (AWS, Google Cloud, Azure).

@@ -50,4 +52,5 @@ When a new version of upstream Kubernetes is released, we closely track the corr

3. After our tests are successful, we announce a timeline for upgrades over our comms channels on Slack. At this point, while we typically announce a window during low-traffic hours when upgrades are conducted, customers have the option of scheduling a specific slot.

-4. When a cluster is upgraded, we upgrade system components, all app templates, the managed cluster control plane as well as all nodegroups. While this operation is meant to be non-disruptive, there are certain prerequisites on the customers' end to ensure zero downtime (see the section below for more details).

\ No newline at end of file

+4. When a cluster is upgraded, we upgrade system components, all app templates, the managed cluster control plane, and all node groups. While this operation is meant to be non-disruptive, customers must meet certain prerequisites to ensure zero downtime (see the Shared Responsibility Model section above for details).
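+
+To confirm that every node group picked up the new version after an upgrade, you can list each node's kubelet version. This is a minimal sketch that assumes you have `kubectl` access to the cluster. It reads the standard `status.nodeInfo.kubeletVersion` field and is not a Porter tool.
+
+```bash
+# Print each node's name and kubelet version. After an upgrade completes,
+# nodes would typically report the same minor version as the control plane.
+node_versions() {
+  kubectl get nodes \
+    --output=custom-columns='NAME:.metadata.name,VERSION:.status.nodeInfo.kubeletVersion'
+}
+
+# Print the control plane version (the "Server Version" line of kubectl version).
+control_plane_version() {
+  kubectl version | grep "Server Version"
+}
+```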

+

diff --git a/cloud-accounts/connecting-a-cloud-account.mdx b/cloud-accounts/connecting-a-cloud-account.mdx

new file mode 100644

index 0000000..97d8d50

--- /dev/null

+++ b/cloud-accounts/connecting-a-cloud-account.mdx

@@ -0,0 +1,297 @@

+---

+title: "Connecting a Cloud Account"

+sidebarTitle: "Connecting a Cloud Account"

+description: "Grant Porter access to provision infrastructure in your cloud provider"

+---

+

+Before Porter can create a cluster, you need to grant it access to your cloud account. Porter uses secure credential methods that don't require storing static API keys.

+

+

+

+ Porter uses AWS IAM role assumption via the `AssumeRole` [operation](https://docs.aws.amazon.com/IAM/latest/UserGuide/id_roles_use.html) to access your account. You create a role in your AWS account and declare that you trust Porter to assume it. This eliminates static credentials and makes access easy to revoke.

+

+ ## Create the IAM Role

+

+

+

+ After selecting AWS as your cloud provider, log into your [AWS Console](https://console.aws.amazon.com) and find your 12-digit Account ID in the top-right corner.

+

+ Enter this ID in Porter and click **Grant Permissions**.
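+
+ If you already use the AWS CLI, you can print the Account ID instead of reading it from the console. This is a minimal sketch. It assumes the CLI is configured with credentials for the account you want Porter to manage, and the function names are hypothetical.
+
+ ```bash
+ # Print the 12-digit ID of the account your current AWS CLI credentials belong to.
+ aws_account_id() {
+   aws sts get-caller-identity --query Account --output text
+ }
+
+ # Succeed only if the argument is exactly 12 digits (no spaces or dashes).
+ is_valid_account_id() {
+   [[ "$1" =~ ^[0-9]{12}$ ]]
+ }
+ ```
+
+ For example, `id=$(aws_account_id) && is_valid_account_id "$id" && echo "$id"` prints the ID only when it is exactly 12 digits.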

+

+

+ Porter opens the AWS CloudFormation console in a new tab to create a stack that provisions the `porter-manager` IAM role.

+

+

+ If the popup is blocked, check your browser settings and allow popups from Porter.

+

+

+ Scroll to the bottom of the CloudFormation page, check the **I acknowledge that AWS CloudFormation might create IAM resources** box, and click **Create Stack**.

+

+ Wait for the stack creation to complete (this takes a few minutes).
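+
+ You can also wait for the stack from a terminal and confirm that the role exists. This is a sketch with several assumptions. The AWS CLI must be configured for the same account and for the region where the CloudFormation console opened. The stack is assumed to be named `PorterRole` (the name used in **Revoking Access** below) and the role `porter-manager`. Check both names in your console if they differ.
+
+ ```bash
+ # Names taken from this page; adjust them if your console shows different ones.
+ STACK_NAME="PorterRole"
+ ROLE_NAME="porter-manager"
+
+ # Block until the stack reaches CREATE_COMPLETE; the command fails if creation fails.
+ wait_for_porter_stack() {
+   aws cloudformation wait stack-create-complete --stack-name "$STACK_NAME"
+ }
+
+ # Print the ARN of the role Porter assumes; the command fails if the role does not exist.
+ porter_role_arn() {
+   aws iam get-role --role-name "$ROLE_NAME" --query Role.Arn --output text
+ }
+ ```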

+

+

+

+

+ The IAM role must remain in your AWS account for Porter to manage your infrastructure. Deleting it will prevent Porter from making changes.

+

+

+ ## Permissions Granted

+

+ The CloudFormation stack creates an IAM role with permissions to:

+

+ - Create and manage EKS clusters

+ - Create and manage VPCs, subnets, and security groups

+ - Create and manage ECR repositories

+ - Create and manage IAM roles for cluster operations

+ - Request service quota increases

+

+

+ If you need Porter to operate with more restricted permissions, contact us through the support widget to inquire about Porter Enterprise.

+

+

+ ## Revoking Access

+

+ To revoke Porter's access:

+

+ 1. First, delete any clusters through the Porter dashboard

+ 2. Navigate to **CloudFormation Stacks** in your AWS console

+ 3. Select the stack named `PorterRole` and click **Delete**

+

+ This removes the IAM role and prevents Porter from accessing your account.
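+
+ The stack deletion can also be scripted with the AWS CLI. This is a minimal sketch. It assumes the stack is named `PorterRole` and that you run it in the region where the stack was created. Delete your clusters through Porter first, as in step 1. The function name is hypothetical.
+
+ ```bash
+ # Delete the stack that created Porter's IAM role, then wait until deletion finishes.
+ revoke_porter_access() {
+   local stack="${1:-PorterRole}"
+   aws cloudformation delete-stack --stack-name "$stack" || return 1
+   aws cloudformation wait stack-delete-complete --stack-name "$stack"
+ }
+ ```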

+

+

+

+ Porter connects to GCP using a service account with permissions to manage your infrastructure.

+

+ ## Prerequisites

+

+

+

+ Before creating the service account, enable the following APIs in your [GCP Console](https://console.cloud.google.com):

+

+ 1. Navigate to **APIs & Services**

+ 2. Click **Enable APIs and Services**

+ 3. Search for and enable each of these APIs:

+ - Compute Engine API

+ - Kubernetes Engine API

+ - Cloud Resource Manager API

+ - Container Registry API

+ - Artifact Registry API

+

+ Each API may take a few minutes to enable. Confirm all five are enabled before proceeding.
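+
+ If you have the `gcloud` CLI installed and authenticated, you can enable all five APIs with one command. This is a sketch. It assumes your account can enable services on the project. The service IDs below are the standard identifiers for the APIs listed above, and the variable and function names are hypothetical.
+
+ ```bash
+ # Service IDs for the five APIs listed above, in the same order.
+ PORTER_GCP_APIS=(
+   compute.googleapis.com              # Compute Engine API
+   container.googleapis.com            # Kubernetes Engine API
+   cloudresourcemanager.googleapis.com # Cloud Resource Manager API
+   containerregistry.googleapis.com    # Container Registry API
+   artifactregistry.googleapis.com     # Artifact Registry API
+ )
+
+ # Enable every API on the given project; gcloud waits for the operation to finish.
+ enable_porter_apis() {
+   gcloud services enable "${PORTER_GCP_APIS[@]}" --project="$1"
+ }
+ ```
+
+ For example, run `enable_porter_apis my-project-id`. Afterwards, `gcloud services list --enabled --project=my-project-id` shows which services are enabled.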

+

+

+

+ ## Create the Service Account

+

+

+

+ In the [GCP Console](https://console.cloud.google.com), go to **IAM & Admin** → **Service Accounts**.

+

+

+ Click **Create Service Account** and enter a name (e.g., `porter-service-account`).

+

+

+ Grant the service account these five roles:

+

+ - **Cloud Storage > Storage Admin**

+ - **Compute Engine > Compute Admin**

+ - **Kubernetes Engine > Kubernetes Engine Admin**

+ - **Service Accounts > Service Account User**

+ - **Artifact Registry > Artifact Registry Administrator**

+

+ Click **Done** to create the account.

+

+

+ 1. Find your new service account in the list

+ 2. Under **Actions**, select **Manage keys**

+ 3. Click **Add Key** → **Create new key**

+ 4. Select **JSON** as the key type

+ 5. The JSON key file downloads automatically. Store it securely, since anyone with the file can authenticate as the service account.
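+
+ The steps above can also be done with `gcloud`. This is a sketch. It assumes you have permission to manage IAM on the project. The role IDs below are the standard identifiers for the five console roles listed above, and the function name and key file name are hypothetical.
+
+ ```bash
+ # IAM role IDs for the five roles listed above.
+ PORTER_SA_ROLES=(
+   roles/storage.admin           # Cloud Storage > Storage Admin
+   roles/compute.admin           # Compute Engine > Compute Admin
+   roles/container.admin         # Kubernetes Engine > Kubernetes Engine Admin
+   roles/iam.serviceAccountUser  # Service Accounts > Service Account User
+   roles/artifactregistry.admin  # Artifact Registry > Artifact Registry Administrator
+ )
+
+ # Create the service account, grant each role, and write a JSON key to ./NAME-key.json.
+ create_porter_sa() {
+   local project="$1" name="${2:-porter-service-account}"
+   local email="${name}@${project}.iam.gserviceaccount.com"
+   local role
+
+   gcloud iam service-accounts create "$name" --project="$project" || return 1
+   for role in "${PORTER_SA_ROLES[@]}"; do
+     gcloud projects add-iam-policy-binding "$project" \
+       --member="serviceAccount:${email}" --role="$role" || return 1
+   done
+   gcloud iam service-accounts keys create "${name}-key.json" \
+     --iam-account="$email" --project="$project"
+ }
+ ```
+
+ For example, `create_porter_sa my-project-id` writes `porter-service-account-key.json`, which you then upload to Porter. A newly created service account can take a few seconds to propagate. If a role binding fails because the account "does not exist", wait briefly and rerun the binding.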

+

+

+ In Porter, click **Drop a GCP Service Account JSON here, or click to browse** and upload the JSON key file.

+

+ Porter verifies the credentials and permissions (this takes about a minute).

+

+

+

+ ## Revoking Access

+

+ To revoke Porter's access:

+

+ 1. First, delete any clusters through the Porter dashboard

+ 2. Navigate to **IAM & Admin** → **Service Accounts** in GCP Console

+ 3. Find the Porter service account and delete it

+

+ This removes the service account and prevents Porter from accessing your account.

+

+

+

+ Porter connects to Azure using a service principal with permissions to manage your infrastructure.

+

+ ## Create the Service Principal

+

+ You can create the service principal using our automated script (recommended) or manually.

+

+

+

+ If you have the Azure CLI installed and authenticated (`az login`), run our setup script:

+

+ ```bash

+ # Download the setup script

+ curl -O https://raw.githubusercontent.com/porter-dev/docs/main/scripts/setup-azure-porter.sh

+

+ # Make it executable

+ chmod +x setup-azure-porter.sh

+

+ # Run the script (optionally provide subscription ID)

+ ./setup-azure-porter.sh [your-subscription-id]

+ ```

+

+ The script:

+ - Enables all required Azure resource providers

+ - Creates the custom `porter-aks-restricted` role

+ - Creates the service principal with proper permissions

+ - Adds Microsoft Graph API permissions

+ - Grants admin consent (if you have permissions)

+ - Displays the credentials needed for Porter

+

+

+ If the script fails to grant admin consent automatically, grant it manually in the Azure Portal: **App registrations** > **azure-porter-restricted-sp** > **API permissions** > **Grant admin consent for Default Directory**.

+

+

+

+

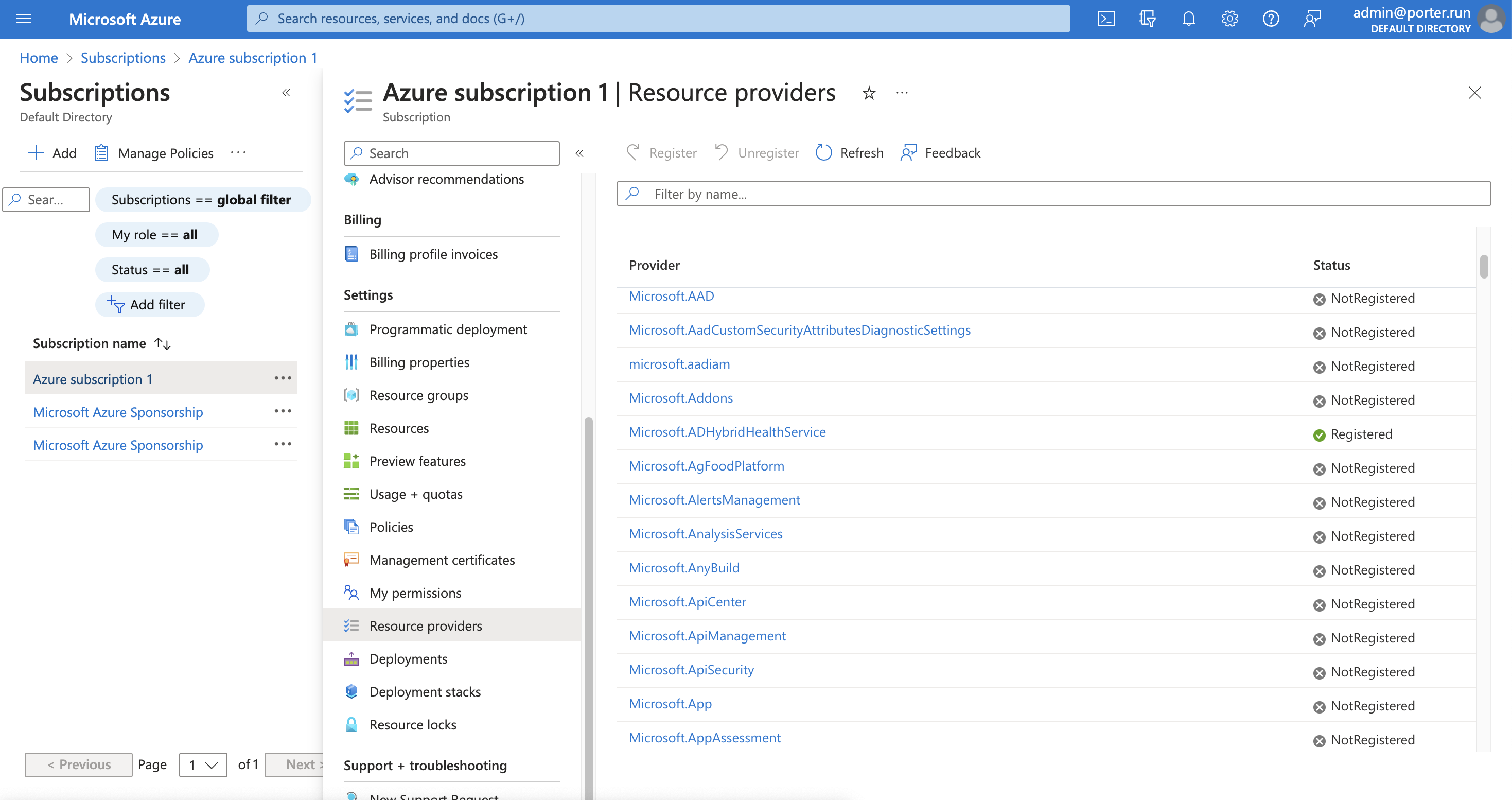

+ ### Enable Resource Providers

+

+ Before creating the service principal, enable the required resource providers:

+

+ 1. In the Azure portal, search for **Subscriptions**

+ 2. Select your subscription and click **Resource providers**

+ 3. Enable the following providers by selecting them and clicking **Register**:

+ - Microsoft.Capacity

+ - Microsoft.Compute

+ - Microsoft.ContainerRegistry

+ - Microsoft.ContainerService

+ - Microsoft.ManagedIdentity

+ - Microsoft.Network

+ - Microsoft.OperationalInsights

+ - Microsoft.OperationsManagement

+ - Microsoft.ResourceGraph

+ - Microsoft.Resources

+ - Microsoft.Storage

+

+ Registration may take a few minutes per provider. Confirm all providers are enabled before proceeding.
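+
+ The same registrations can be scripted with the Azure CLI. This is a sketch. It assumes you have run `az login` and that `az account set --subscription` points at the subscription Porter will use. The variable and function names are hypothetical.
+
+ ```bash
+ # Resource providers listed above.
+ PORTER_AZURE_PROVIDERS=(
+   Microsoft.Capacity Microsoft.Compute Microsoft.ContainerRegistry
+   Microsoft.ContainerService Microsoft.ManagedIdentity Microsoft.Network
+   Microsoft.OperationalInsights Microsoft.OperationsManagement
+   Microsoft.ResourceGraph Microsoft.Resources Microsoft.Storage
+ )
+
+ # Register each provider and wait for its registration to finish.
+ register_porter_providers() {
+   local ns
+   for ns in "${PORTER_AZURE_PROVIDERS[@]}"; do
+     az provider register --namespace "$ns" --wait || return 1
+   done
+ }
+
+ # Print "NAMESPACE STATE" per provider; every line should end in "Registered".
+ porter_provider_states() {
+   local ns
+   for ns in "${PORTER_AZURE_PROVIDERS[@]}"; do
+     printf '%s %s\n' "$ns" \
+       "$(az provider show --namespace "$ns" --query registrationState --output tsv)"
+   done
+ }
+ ```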

+

+ ### Create the Custom Role

+

+ Run the following commands in the Azure Cloud Shell (Bash) or your local terminal with the Azure CLI:

+

+ ```bash

+ # Set your subscription ID

+ PORTER_AZURE_SUBSCRIPTION_ID=xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx

+

+ # Create the role

+ envsubst << EOF | az role definition create --role-definition @-

+ {

+ "assignableScopes": ["/subscriptions/${PORTER_AZURE_SUBSCRIPTION_ID}"],

+ "description": "Grants Porter access to manage resources for an AKS cluster.",

+ "id": "/subscriptions/${PORTER_AZURE_SUBSCRIPTION_ID}/providers/Microsoft.Authorization/roleDefinitions/porter-aks-restricted",

+ "isCustom": true,

+ "name": "porter-aks-restricted",

+ "permissions": [

+ {

+ "actions": ["*"],

+ "dataActions": [],

+ "notActions": [

+ "Microsoft.Authorization/elevateAccess/Action",

+ "Microsoft.Blueprint/blueprintAssignments/write",

+ "Microsoft.Blueprint/blueprintAssignments/delete",

+ "Microsoft.Compute/galleries/share/action"

+ ],

+ "notDataActions": []

+ }

+ ],

+ "roleName": "Contributor",

+ "roleType": "BuiltInRole",

+ "type": "Microsoft.Authorization/roleDefinitions"

+ }

+ EOF

+ ```

+

+ ### Create the Service Principal

+

+ ```bash

+ az ad sp create-for-rbac \

+ --name="azure-porter-restricted-sp" \

+ --role="porter-aks-restricted" \

+ --scopes="/subscriptions/${PORTER_AZURE_SUBSCRIPTION_ID}"

+ ```

+

+ Save the output—you'll need these values:

+

+ ```json

+ {

+ "appId": "00000000-0000-0000-0000-000000000000",

+ "displayName": "azure-porter-restricted-sp",

+ "password": "0000-0000-0000-0000-000000000000",

+ "tenant": "00000000-0000-0000-0000-000000000000"

+ }

+ ```

+

+ ### Grant API Permissions

+

+ 1. In the Azure portal, search for **App registrations**

+ 2. Under **All applications**, select your new service principal

+ 3. Navigate to **API Permissions**

+ 4. Click **Add a permission** → **Microsoft Graph** → **Application permissions**

+ 5. Select these permissions:

+ - Application.ReadWrite.All

+ - Directory.ReadWrite.All

+ - Domain.Read.All

+ - Group.Create

+ - Group.ReadWrite.All

+ - RoleManagement.ReadWrite.Directory

+ - User.ReadWrite.All

+ 6. Click **Add permissions**

+ 7. Click **Grant admin consent for Default Directory**

+

+

+

+ ## Enter Credentials in Porter

+

+ In Porter, enter the following values from your service principal:

+

+ | Field | Value |

+ |-------|-------|

+ | **Subscription ID** | Your Azure subscription ID |

+ | **Application (Client) ID** | The `appId` from your service principal |

+ | **Client Secret** | The `password` from your service principal |

+ | **Tenant ID** | The `tenant` from your service principal |

+

+ ## Rotating Credentials

+

+ Azure client secrets have a fixed expiry. Secrets created with `az ad sp create-for-rbac` are valid for one year by default. When a secret expires, Porter can't manage infrastructure or deploy updates, although existing workloads continue running.

+

+ To refresh your client secret:

+

+ 1. Visit [https://aka.ms/NewClientSecret](https://aka.ms/NewClientSecret)

+ 2. Select the app ID for your Porter service principal

+ 3. Generate a new client secret and copy the value

+ 4. In Porter, navigate to **Integrations** → **Azure**

+ 5. Update the **Password** field with the new value
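+
+ Steps 1 to 3 can also be done with the Azure CLI. This is a sketch. It assumes your account can manage the Porter app registration, and you pass the service principal's `appId` as the argument. The function names are hypothetical. `--append` keeps the current secret valid until you have updated Porter, and `--years 1` gives the new secret a one-year lifetime.
+
+ ```bash
+ # Show when each existing client secret on the app registration expires.
+ secret_expiry_dates() {
+   az ad app credential list --id "$1" --query "[].endDateTime" --output tsv
+ }
+
+ # Add a new client secret valid for one year and print only its value.
+ new_client_secret() {
+   az ad app credential reset --id "$1" --append --years 1 \
+     --query password --output tsv
+ }
+ ```
+
+ For example, `new_client_secret "$APP_ID"` prints the new secret to paste into Porter. Once Porter accepts it, you can remove the old secret in the Azure portal.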

+

+ ## Revoking Access

+

+ To revoke Porter's access:

+

+ 1. First, delete any clusters through the Porter dashboard

+ 2. In the Azure portal, search for **App registrations**

+ 3. Find and delete the Porter service principal

+ 4. Optionally, delete the custom role definition

+

+ This removes the service principal and prevents Porter from accessing your account.

+

+

+

diff --git a/cloud-accounts/creating-a-cluster.mdx b/cloud-accounts/creating-a-cluster.mdx

new file mode 100644

index 0000000..d819754

--- /dev/null

+++ b/cloud-accounts/creating-a-cluster.mdx

@@ -0,0 +1,264 @@

+---

+title: "Creating a Cluster"

+sidebarTitle: "Creating a Cluster"

+description: "Provision a cluster in your cloud account"

+---

+

+After [connecting your cloud account](/cloud-accounts/connecting-a-cloud-account), you can create a cluster. Porter handles all the complexity of cluster provisioning, including networking, load balancers, and node groups.

+

+## Provisioning Your Cluster

+

+

+

+

+

+ Porter displays estimated monthly costs for your infrastructure (~$225/month for AWS).

+

+ These estimates are for the default cluster configuration. Actual costs vary based on usage, region, and customizations.

+

+ Review the cost breakdown and click **Accept** to continue.

+

+

+ Porter pre-configures your cluster with sensible defaults:

+

+ - **Cluster name**: Auto-generated based on your project

+ - **Region**: Defaults to `us-east-1`

+ - **Node groups**: EKS clusters are initially provisioned with cost-optimal node groups. You can edit your node group configuration after provisioning completes.

+

+

+ For guidance on choosing a region: if you have an external database, choose that region or a region as close as possible. Otherwise, choose a region near your primary customer base.

+

+

+ You can customize these settings or accept the defaults.

+

+

+ If your AWS account's service quotas are too low for the cluster, Porter displays a warning and offers to request quota increases on your behalf.

+

+ **Allow Porter to auto-request AWS quota** is enabled by default. This is the recommended approach.

+

+ Alternatively, you can manually request quota increases through the [AWS Service Quotas console](https://console.aws.amazon.com/servicequotas/). If you go the manual route, you won't be able to provision until the quota increase requests are approved.
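+
+ You can check where you stand with the AWS CLI. This is a sketch with several assumptions. This page doesn't list which quotas Porter checks. `L-1216C47A` is the EC2 quota code for Running On-Demand Standard instances (measured in vCPUs), and it is used here only as a common example. The region defaults to `us-east-1` to match Porter's default, and the function names are hypothetical.
+
+ ```bash
+ # Print the current value of the On-Demand Standard instances vCPU quota.
+ ec2_standard_vcpu_quota() {
+   aws service-quotas get-service-quota --region "${1:-us-east-1}" \
+     --service-code ec2 --quota-code L-1216C47A \
+     --query Quota.Value --output text
+ }
+
+ # List EC2 quota increase requests with their status (e.g. PENDING, APPROVED).
+ ec2_quota_requests() {
+   aws service-quotas list-requested-service-quota-change-history --region "${1:-us-east-1}" \
+     --service-code ec2 \
+     --query 'RequestedQuotas[].[QuotaName,DesiredValue,Status]' --output table
+ }
+ ```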

+

+

+ Click **Provision** to start creating your infrastructure.

+

+

+ Provisioning takes approximately 30-45 minutes. You can close the browser and return later — Porter continues working in the background.

+

+

+

+

+ ### Troubleshooting

+

+

+

+ If your cluster has been provisioning for more than 45 minutes, there may be an issue:

+

+ - Verify that the IAM role still exists in your AWS account

+ - Check the [AWS Service Quotas console](https://console.aws.amazon.com/servicequotas/) to confirm that your quota increase requests were approved

+ - Verify that the selected region supports the requested instance types

+

+ If issues persist, contact us through the dashboard chat bot with your project ID.

+

+

+ If you encounter permission errors:

+

+ - Verify the CloudFormation stack created successfully and the role exists

+ - Ensure the role has not been modified after creation

+ - Check that no Service Control Policies (SCPs) applied to your account through AWS Organizations restrict the actions Porter needs

+

+

+ AWS typically approves quota increases automatically, but some may require manual review:

+

+ - Check the status of quota requests in the [AWS Service Quotas console](https://console.aws.amazon.com/servicequotas/)

+ - Requests under manual review typically take 24-48 hours

+ - If urgent, contact AWS support to expedite the review

+

+

+ If the CloudFormation stack fails to create:

+

+ - Ensure you have sufficient permissions in your AWS account to create IAM roles

+ - Check that you're logged into the correct AWS account (the account ID in the console should match the one you entered in Porter)

+ - Verify your account is in good standing and billing is enabled

+

+

+

+

+

+

+

+ Porter displays estimated monthly costs for your infrastructure (~$253/month for GCP).

+

+ These estimates are for the default cluster configuration. Actual costs vary based on usage, region, and customizations.

+

+ Review the cost breakdown and click **Accept** to continue.

+

+

+ Before provisioning, Porter verifies your GCP project is properly configured. If any checks fail, troubleshooting steps are shown on the dashboard.

+

+ Common checks include:

+ - Required APIs are enabled

+ - Service account has necessary permissions

+ - Project has billing enabled

+

+

+ Porter pre-configures your cluster with sensible defaults:

+

+ - **Cluster name**: Auto-generated based on your project

+ - **Region**: Defaults to `us-east1`

+ - **Node groups**: Pre-configured with appropriate instance types

+

+

+ For guidance on choosing a region: if you have an external database, choose a region close to it. Otherwise, choose a region near your primary customer base.

+

+

+ You can customize these settings or accept the defaults.

+

+

+ Click **Provision** to start creating your infrastructure.

+

+

+ Provisioning takes approximately 30-45 minutes. You can close the browser and return later—Porter continues working in the background.

+

+

+

+

+ ### Troubleshooting

+

+

+

+ If your cluster has been provisioning for more than 45 minutes:

+

+ - Verify the service account still exists and has the required permissions

+ - Check that all required APIs are still enabled

+ - Verify the GCP project has billing enabled

+

+ If issues persist, contact us through the dashboard chat bot with your project ID.

+

+

+ If you see errors about APIs not being enabled:

+

+ 1. Navigate to **APIs & Services** in the GCP Console

+ 2. Verify all five required APIs are enabled:

+ - Compute Engine API

+ - Kubernetes Engine API

+ - Cloud Resource Manager API

+ - Container Registry API

+ - Artifact Registry API

+ 3. Return to Porter and retry provisioning

+

+

+ If you encounter permission errors:

+

+ - Verify the service account has all five required roles

+ - Check that the JSON key file matches the service account

+ - Ensure the service account hasn't been modified since creation

+

+

+

+

+

+

+

+ Porter displays estimated monthly costs for your infrastructure (~$165/month for Azure).

+

+ These estimates are for the default cluster configuration. Actual costs vary based on usage, region, and customizations.

+

+ Review the cost breakdown and click **Accept** to continue.

+

+

+ By default, Azure caps how many vCPUs you can provision per region and per VM family. Before provisioning, you may need to request quota increases.

+

+ In the Azure portal:

+ 1. Navigate to your subscription

+ 2. Select **Usage + quotas**

+ 3. Set the resource filter to **Compute** and region to your desired region

+

+ Request increases for:

+

+ | Resource Family | Recommended Quota |

+ |-----------------|-------------------|

+ | Total Regional vCPUs | 40 |

+ | Standard Basv2 Family vCPUs | 40 |

+

+ Click **Request quota increase** for each resource. Requests are typically approved automatically within a few minutes. If not, fill out the support ticket as prompted.
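+
+ You can compare current usage against these limits with the Azure CLI. This is a sketch. It assumes `az` is logged in to the right subscription and that the usage names contain the strings shown in the table above. `eastus` is the default only because it is Porter's default region, and the function name is hypothetical.
+
+ ```bash
+ # Show used vs. limit for total regional vCPUs and the Basv2 family in one region.
+ porter_vcpu_usage() {
+   az vm list-usage --location "${1:-eastus}" \
+     --query "[?contains(localName, 'Regional vCPUs') || contains(localName, 'Basv2')].{name:localName, used:currentValue, limit:limit}" \
+     --output table
+ }
+ ```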

+

+

+ Porter pre-configures your cluster with sensible defaults:

+

+ - **Cluster name**: Auto-generated based on your project

+ - **Region**: Defaults to `eastus`

+ - **Azure tier**: Free tier for non-production, Standard tier for production

+ - **Node groups**: Pre-configured with appropriate instance types

+

+

+ For guidance on choosing a region: if you have an external database, choose a region close to it. Otherwise, choose a region near your primary customer base.

+

+

+ You can customize these settings or accept the defaults. The Azure tier can be changed after cluster creation.

+

+

+ Click **Provision** to start creating your infrastructure.

+

+

+ Provisioning takes approximately 30-45 minutes. You can close the browser and return later—Porter continues working in the background.

+

+

+

+

+ ### Troubleshooting

+

+

+

+ If your cluster has been provisioning for more than 45 minutes:

+

+ - Verify the service principal still exists and has the required permissions

+ - Check that all resource providers are registered

+ - Verify your compute quota requests were approved

+

+ If issues persist, contact us through the dashboard chat bot with your project ID.

+

+

+ If you see errors about insufficient quota:

+

+ 1. Navigate to **Usage + quotas** in your Azure subscription

+ 2. Check the status of your quota requests

+ 3. If pending, wait for approval or contact Azure support

+ 4. Once approved, retry provisioning in Porter

+

+

+ If you see errors about resource providers:

+

+ 1. Navigate to your subscription's **Resource providers** page

+ 2. Find the mentioned provider and click **Register**

+ 3. Wait for registration to complete

+ 4. Retry provisioning in Porter

+

+

+ If you encounter permission errors:

+

+ - Verify the service principal has the `porter-aks-restricted` role

+ - Check that admin consent was granted for Microsoft Graph permissions

+ - Ensure the client secret hasn't expired

+

+

+

+

+

+---

+

+## After Provisioning

+

+Once your cluster is ready, you'll see the Porter dashboard. From here you can:

+

+

+

+ Deploy an application from GitHub or a container registry.

+

+

+ Customize instance types and scaling settings.

+

+

+ Monitor cluster health and resource usage.

+

+

+

diff --git a/cloud-accounts/deleting-a-cluster.mdx b/cloud-accounts/deleting-a-cluster.mdx

new file mode 100644

index 0000000..346b961

--- /dev/null

+++ b/cloud-accounts/deleting-a-cluster.mdx

@@ -0,0 +1,156 @@

+---

+title: "Deleting a Cluster"

+sidebarTitle: "Deleting a Cluster"

+description: "Remove a Porter-provisioned cluster and clean up cloud resources"

+---

+

+

+Deleting a cluster removes all applications and data running on it. This action cannot be undone.

+

+

+## Before You Delete

+

+Before deleting your cluster:

+

+1. **Back up any data** stored in your applications or persistent volumes

+2. **Export environment variables** and secrets if you need them for future deployments

+3. **Note your configuration** if you plan to recreate the cluster later

+

+---

+

+## Delete the Cluster

+

+

+

+

+ Porter needs the IAM role to delete resources. Delete the cluster first, then delete the IAM role. If you've already deleted the IAM role, you'll need to [delete resources directly from the AWS console](/other/deleting-dangling-resources).

+

+

+

+

+ 1. Navigate to the **Infrastructure** tab in the Porter dashboard

+ 2. Click **Delete Cluster**

+ 3. Confirm the deletion

+

+ This process may take up to 30 minutes.

+

+

+ After the cluster is deleted:

+

+ 1. Navigate to **CloudFormation Stacks** in your AWS console

+ 2. Select the stack named `PorterRole`

+ 3. Click **Delete**

+

+ This revokes Porter's access to your AWS account.

+

+

+ Check your AWS console to verify all resources have been removed:

+

+ - **EC2**: No instances, load balancers, or security groups related to the cluster

+ - **EKS**: No clusters remaining

+ - **VPC**: No VPCs created by Porter

+ - **ECR**: Container images may remain (delete manually if not needed)
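+
+ The same checks can be run with the AWS CLI. This is a sketch. It assumes the CLI is configured for the cluster's account and region. It lists every resource of each kind, so compare the output against resources that belong to other workloads before deleting anything. The function name is hypothetical.
+
+ ```bash
+ # List resource types that commonly outlive a cluster in one region.
+ leftover_aws_resources() {
+   local region="${1:-us-east-1}"
+   echo "EKS clusters:"
+   aws eks list-clusters --region "$region" --query clusters --output text
+   echo "Non-default VPCs:"
+   aws ec2 describe-vpcs --region "$region" \
+     --filters Name=is-default,Values=false --query 'Vpcs[].VpcId' --output text
+   echo "Load balancers (ALB/NLB):"
+   aws elbv2 describe-load-balancers --region "$region" \
+     --query 'LoadBalancers[].LoadBalancerName' --output text
+   echo "Classic load balancers:"
+   aws elb describe-load-balancers --region "$region" \
+     --query 'LoadBalancerDescriptions[].LoadBalancerName' --output text
+   echo "ECR repositories:"
+   aws ecr describe-repositories --region "$region" \
+     --query 'repositories[].repositoryName' --output text
+ }
+ ```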

+

+

+

+

+ Deleting resources via Porter may result in dangling resources. See [Deleting Dangling Resources](/other/deleting-dangling-resources) for cleanup guidance.

+

+

+

+

+

+

+ 1. Navigate to the **Infrastructure** tab in the Porter dashboard

+ 2. Click **Additional Settings**

+ 3. Click **Delete Cluster**

+ 4. Confirm the deletion

+

+ This process may take up to 30 minutes.

+

+

+ Check your GCP console to verify all resources have been removed:

+

+ - **Compute Engine**: No instances or load balancers related to the cluster

+ - **Kubernetes Engine**: No clusters remaining

+ - **VPC networks**: No networks created by Porter

+ - **Artifact Registry**: Container images may remain (delete manually if not needed)
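+
+ The same checks can be run with `gcloud`. This is a sketch. It assumes the CLI is authenticated for the project. Most projects contain a `default` VPC network that Porter did not create. The function name is hypothetical.
+
+ ```bash
+ # List resource types that commonly outlive a cluster in one project.
+ leftover_gcp_resources() {
+   local project="$1"
+   echo "GKE clusters:"
+   gcloud container clusters list --project="$project"
+   echo "VPC networks:"
+   gcloud compute networks list --project="$project"
+   echo "Forwarding rules (load balancers):"
+   gcloud compute forwarding-rules list --project="$project"
+   echo "Artifact Registry repositories:"
+   gcloud artifacts repositories list --project="$project"
+ }
+ ```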

+

+

+ If you no longer need Porter to access your GCP project:

+

+ 1. Navigate to **IAM & Admin** → **Service Accounts**

+ 2. Find and delete the Porter service account

+

+

+

+

+ Deleting resources via Porter may result in dangling resources. You can remove dangling resources via the GCP console or the gcloud CLI.

+

+

+

+

+

+

+ 1. Navigate to the **Infrastructure** tab in the Porter dashboard

+ 2. Click **Additional Settings**

+ 3. Click **Delete Cluster**

+ 4. Confirm the deletion

+

+ This process may take up to 30 minutes.

+

+

+ After deletion, verify in the Azure portal:

+

+ 1. Search for **Resource groups**

+ 2. A resource group named `-` may remain, containing your build images

+ 3. Porter does not delete build images by default—delete this resource group manually if needed

+ 4. Delete any other remaining resource groups created by Porter
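+
+ To review what remains from a terminal, you can list resource groups with the Azure CLI. This is a sketch. It assumes `az` is logged in to the subscription that hosted the cluster. By default, AKS also creates a node resource group whose name starts with `MC_`. The function name is hypothetical.
+
+ ```bash
+ # List resource groups in the current subscription with location and provisioning state.
+ list_resource_groups() {
+   az group list \
+     --query "[].{name:name, location:location, state:properties.provisioningState}" \
+     --output table
+ }
+ ```
+
+ Delete a group only after confirming it belongs to Porter, for example with `az group delete --name GROUP_NAME --yes --no-wait`.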

+

+

+ If you no longer need Porter to access your Azure subscription:

+

+ 1. Search for **App registrations** in the Azure portal

+ 2. Find and delete the Porter service principal

+ 3. Optionally, delete the custom `porter-aks-restricted` role definition

+

+

+

+

+ Deleting resources via Porter may result in dangling resources. Check your Azure portal to verify all resources have been removed.

+

+

+

+

+---

+

+## Troubleshooting Deletion

+

+

+

+ If deletion takes more than 45 minutes:

+

+ 1. Check your cloud provider's console for any resources in a "deleting" state

+ 2. Look for dependencies that may be blocking deletion (e.g., load balancers with active connections)

+ 3. Contact us through the dashboard chat bot with your project ID

+

+

+ If resources remain in your cloud account after Porter reports deletion complete:

+

+ 1. Follow the [Deleting Dangling Resources](/other/deleting-dangling-resources) guide

+ 2. Check regions other than your cluster's region for leftover resources

+ 3. Look for resources with names containing your project ID

+

+

+ If Porter can't delete the cluster because its credentials have expired or been revoked:

+

+ **AWS**: Re-create the CloudFormation stack to restore the IAM role, then delete the cluster

+

+ **GCP**: Upload a new service account JSON key in **Integrations** → **GCP**, then delete the cluster

+

+ **Azure**: Generate a new client secret and update it in **Integrations** → **Azure**, then delete the cluster
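+
+ You can also generate the GCP and Azure credentials from a terminal. In this sketch, `SA_EMAIL` and `APP_ID` are hypothetical placeholders for your Porter service account email and service principal app ID. The app ID is shown under **Integrations** → **Azure**.
+
+ ```bash
+ # GCP: create a new JSON key for the Porter service account, then upload key.json to Porter
+ SA_EMAIL="porter@your-project-id.iam.gserviceaccount.com"
+ gcloud iam service-accounts keys create key.json --iam-account="$SA_EMAIL"
+
+ # Azure: add a new client secret while keeping existing ones; copy the "password" field from the output
+ APP_ID="00000000-0000-0000-0000-000000000000"
+ az ad app credential reset --id "$APP_ID" --append
+ ```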

+

+ If you can't restore credentials, you'll need to [delete resources manually](/other/deleting-dangling-resources).

+

+

+

diff --git a/cloud-accounts/node-groups.mdx b/cloud-accounts/node-groups.mdx

index ed281fb..58fc365 100644

--- a/cloud-accounts/node-groups.mdx

+++ b/cloud-accounts/node-groups.mdx

@@ -1,81 +1,138 @@

---

title: "Node Groups"

-description: "Configure node groups and optimize compute costs for your Porter cluster"

+sidebarTitle: "Node Groups"

+description: "Configure node groups, instance types, and optimize compute costs"

---

-Porter provides flexible options for managing compute resources in your cluster.

-You can add custom node groups for specialized workloads or enable cost

-optimization to reduce infrastructure spend.

+Node groups are collections of compute instances that run your workloads. Porter provides flexible options for managing compute resources, including custom node groups for specialized workloads and cost optimization features.

-## Creating a Custom Node Group

+## Default Node Groups

+

+Porter provisions three node groups by default:

+

+| Node Group | Purpose | Autoscaling |

+|------------|---------|-------------|

+| **System** | Kubernetes system workloads | Fixed |

+| **Monitoring** | Observability stack (metrics, logs) | Fixed |

+| **Application** | Your application workloads | Enabled (1 node minimum) |

+

+The application node group autoscales based on demand. You can customize instance types, node counts, and scaling limits for all node groups.

+

+---

+

+## Changing Instance Types

+

+You can modify the instance type for any node group from the Infrastructure settings.

From your Porter dashboard, click on the **Infrastructure** tab in the left sidebar.

+

+ Click on **Overview** to view your cluster configuration and node groups.

+

+

+ Expand the node group dropdown (e.g., **Default node group**) to see the following settings:

+

+ | Setting | Description |

+ |---------|-------------|

+ | **Machine type** | The underlying instance for nodes. Options vary by cloud provider. |

+ | **Maximum nodes** | Upper limit for autoscaling under high load |

+ | **Minimum nodes** | Lower limit for autoscaling under low load |

+ | **Disk size** | Storage capacity for each node (default: 50GB) |

+

+

+ Select the appropriate **Machine type** from the dropdown, then scroll to the bottom and click **Update**.

+

+

+

+### Instance Type Pricing

+

+

+

+

+

+

+

+### Why is my instance type not available?

+

+Instance types may not be available if:

+

+- The instance is not available in your cluster's region (cloud providers release new instances on a phased, region-by-region basis)

+- The instance type hasn't been validated by Porter for compatibility

+

+If you need a specific instance type that isn't listed, contact us through the dashboard chat bot.

+

+---

+

+## Creating a Custom Node Group

+

+Create custom node groups for specialized workloads like GPU processing, high-memory applications, or isolated environments.

+

+

+ From your Porter dashboard, click on the **Infrastructure** tab in the left sidebar.

+

Click on **Cluster** to view your cluster configuration and node groups.

-

- Click **Add an additional node group** to open the node group configuration panel.

+ Click **Add an additional node group** to open the configuration panel.

-

+ Choose between two configuration approaches:

+

- ## Cost Optimization for Node Groups

-

- Set your maximum CPU cores limit to prevent unexpected scaling. This helps prevent unexpected cost increases by setting a cap.

-

-

+ Cost optimization automatically selects the most cost-effective instance types for your workloads. Clusters provisioned on AWS default to cost-optimized node groups.

+ Set your **maximum CPU cores limit** to prevent unexpected scaling. This caps infrastructure costs while allowing flexibility in instance selection.

### Limitations

- The following node group configurations should continue using fixed instance types until we support cost optimization for them:

+ The following configurations should use fixed instance types instead:

- GPU instances (e.g., instances with NVIDIA GPUs)

- Spot instances

- Instances in public subnets

- Instances with specialized hardware requirements

-

- ## Fixed Node Groups

-

- Fixed node groups uses a specific fixed instance type approach. Applications built on this will only be scheduled on the exact instance type. This gives you more control, but has the limitation of over-provisioning certain resources if configured incorrectly.

+

+ Fixed node groups use a specific instance type. Applications scheduled on this group run only on the exact instance type you specify.

-

+ This gives you precise control but may result in over-provisioning if configured incorrectly.

- Configure your node group with the following settings:

+ Configure:

| Setting | Description |

|---------|-------------|

| **Instance type** | The machine type for nodes in this group |

- | **Minimum nodes** | The minimum number of nodes to maintain (set to 0 for scale-to-zero) |

- | **Maximum nodes** | The upper limit for autoscaling |

+ | **Minimum nodes** | Minimum number of nodes (set to 0 for scale-to-zero) |

+ | **Maximum nodes** | Upper limit for autoscaling |

- Choose instance types based on your workload requirements. For GPU workloads, create a second node group and select instances with GPU support (e.g., `g4dn.xlarge` on AWS, `Standard_NC4as_T4_v3` on Azure, `g2-standard-4` on GCP).

+ For GPU workloads, select instances with GPU support:

+ - **AWS**: `g4dn.xlarge`, `p3.2xlarge`

+ - **Azure**: `Standard_NC4as_T4_v3`

+ - **GCP**: `g2-standard-4`

-

- **Health Checks Required**: For production applications, ensure proper health checks are configured before scheduling them on cost-optimized node groups. This ensures your applications can be safely rescheduled on new nodes without causing any disruption as nodes are reshuffled.

+ **Health Checks Required**: For production applications on cost-optimized node groups, configure proper [health checks](/configure/health-checks). This ensures applications can be safely rescheduled as nodes are reshuffled.

-

- Click **Save** to create the node group. Porter will provision the new nodes in your cluster. This may take a few minutes.

+ Click **Save** to create the node group. Porter provisions the new nodes, which may take a few minutes.

-## Assigning Workloads

+---

+

+## Assigning Workloads to Node Groups

-Once your custom node group is created, you can assign applications to run on it:

+Once your custom node group is created, assign applications to run on it:

1. Navigate to your application in the Porter dashboard

2. Go to the **Services** tab

@@ -84,15 +141,17 @@ Once your custom node group is created, you can assign applications to run on it

5. Select your custom node group from the dropdown

6. Save and redeploy your application

+---

+

## Deleting a Node Group

+

+Ensure no workloads are scheduled on the node group before deleting. Workloads will be disrupted if their node group is removed.

+

+

To remove a custom node group:

-1. First, migrate any workloads running on the node group to another node group

+1. Migrate any workloads running on the node group to another node group

2. Navigate to **Infrastructure** → **Cluster**

3. Find the node group you want to delete

4. Click the delete icon and confirm

-

-

- Ensure no workloads are scheduled on the node group before deleting. Workloads will be disrupted if their node group is removed.

-

diff --git a/cloud-accounts/overview.mdx b/cloud-accounts/overview.mdx

index a9e0808..5e6eda5 100644

--- a/cloud-accounts/overview.mdx

+++ b/cloud-accounts/overview.mdx

@@ -1,56 +1,110 @@

---

-title: "Provisioning Infrastructure"

+title: "Cloud Accounts"

sidebarTitle: "Overview"

+description: "Connect your cloud provider and provision infrastructure with Porter"

---

-Porter provisions and manages the underlying infrastructure in your own cloud. During project creation, Porter will prompt you to provision resources in a cloud provider of your choice.

+Porter provisions and manages infrastructure directly in your own cloud account. This gives you full control over your data and resources while Porter handles the complexity of Kubernetes cluster management.

-For step by step instructions on how to provision infrastructure in each cloud provider, see the link below.

+## Supported Cloud Providers

-

-

-

+

+

+ Amazon Web Services

+

+

+ Google Cloud Platform

+

+

+ Microsoft Azure

+

+

-## FAQ[](#faq "Direct link to heading")

+## Getting Started

-### How much does the underlying infrastructure cost?[](#how-much-does-the-underlying-infrastructure-cost "Direct link to heading")

+Setting up Porter with your cloud account involves three steps:

-The cost of your infrastructure varies entirely on the amount of resources you use. By default, clusters provisioned by Porter cost around $200 per month. **All of the underlying infrastructure cost can be covered with your cloud credits from AWS, Google Cloud, and Azure.**

+

+

+ Provide Porter with credentials to access your cloud provider. On AWS, Porter assumes an IAM role in your account, so no static credentials are required. On Azure and GCP, Porter authenticates with a service principal (Azure) or service account (GCP) that you create.

-### What does Porter-provisioned infrastructure consist of?

+ [Connect a cloud account →](/cloud-accounts/connecting-a-cloud-account)

+

+

+ Porter provisions a Kubernetes cluster with sensible defaults including networking, load balancers, and node groups. Provisioning takes approximately 30-45 minutes.

-Infrastructure provisioned by Porter on AWS includes a VPC, a Network Load Balancer, and an EKS cluster. On GCP and Azure, the architecture is identical with equivalent components on each cloud provider (VPC/VNet, Load Balancer, and managed Kubernetes cluster).

+ [Create a cluster →](/cloud-accounts/creating-a-cluster)

+

+

+ Once your cluster is ready, deploy applications from GitHub or a container registry.

-#### Default Node Group Configuration

+ [Deploy your first app →](/getting-started/quickstart#step-4-create-your-first-application)

+

+

-By default, Porter provisions three node groups in your cluster:

+---

+

+## What Porter Provisions

+

+Infrastructure provisioned by Porter includes:

+

+| Component | AWS | Azure | GCP |

+|-----------|-----|-------|-----|

+| **Virtual Network** | VPC | VNet | VPC |

+| **Load Balancer** | Network Load Balancer | Azure Load Balancer | Cloud Load Balancing |

+| **Kubernetes Cluster** | EKS | AKS | GKE |

+| **Container Registry** | ECR | ACR | Artifact Registry |

+

+### Default Node Groups

+

+Porter provisions three node groups by default:

-| Node Group | Purpose | AWS Default | Azure Default | GCP Default |

-|------------|---------|-------------|---------------|-------------|

-| **System** | Kubernetes system workloads | 2x t3.medium | 2x Standard_B2s | 2x e2-medium |

-| **Monitoring** | Observability stack (metrics, logs) | 1x t3.large | 1x Standard_B2ms | 1x e2-standard-2 |

-| **Application** | Your application workloads | 1x t3.medium (autoscales) | 1x Standard_B2s (autoscales) | 1x e2-medium (autoscales) |

+| Node Group | Purpose | AWS | Azure | GCP |

+|------------|---------|-----|-------|-----|

+| **System** | Kubernetes system workloads | 2× t3.medium | 2× Standard_B2s | 2× e2-medium |

+| **Monitoring** | Observability stack | 1× t3.large | 1× Standard_B2ms | 1× e2-standard-2 |

+| **Application** | Your workloads | 1× t3.medium (autoscales) | 1× Standard_B2s (autoscales) | 1× e2-medium (autoscales) |

-**Application node group details:**

-- **Initial size:** 1 node

-- **Autoscaling:** Enabled by default

-- **Maximum nodes:** Scales based on workload demand and configured limits

-- **Minimum nodes:** 1 node to ensure availability

+

+On AWS, the application node group uses [cost optimization](/cloud-accounts/node-groups#creating-a-custom-node-group) by default, which automatically selects the most cost-effective instance types for your workloads.

+

-**Disk size:** Each node includes 50GB of disk storage by default across all cloud providers.

+The application node group autoscales based on workload demand. All nodes include 50GB of disk storage by default.

- You can customize machine types, node counts, and disk sizes after initial provisioning through the Infrastructure tab in the Porter dashboard.

+You can customize machine types, node counts, and disk sizes after initial provisioning through the [Node Groups](/cloud-accounts/node-groups) settings.

+

+---

+

+## FAQ

+

+### How much does the underlying infrastructure cost?

+

+The cost varies based on resource usage. By default, clusters provisioned by Porter cost approximately:

+

+| Provider | Estimated Monthly Cost |

+|----------|----------------------|

+| AWS | ~$225/month |

+| GCP | ~$253/month |

+| Azure | ~$165/month |

+

+These estimates are for the default cluster configuration. Actual costs vary based on usage, region, and customizations. **All infrastructure costs can be covered with cloud credits from AWS, Google Cloud, or Azure.**

+

+### Can I use my existing cloud credits?

+

+Yes. Porter provisions infrastructure directly in your cloud account, so any credits you have with AWS, GCP, or Azure apply to the resources Porter creates.

+

+### What permissions does Porter need?

+

+Porter requires permissions to create and manage Kubernetes clusters, networking resources, and container registries. The setup process varies by provider:

+

+- **AWS**: Automatic setup. Porter guides you through creating a CloudFormation stack that provisions the required IAM role in one click

+- **Azure**: Manual setup. Create a service principal using our setup script or the Azure CLI

+- **GCP**: Manual setup. Create a service account and download a JSON key file

+

+For detailed permission requirements and setup instructions, see [Connecting a Cloud Account](/cloud-accounts/connecting-a-cloud-account).

+

+### Can I revoke Porter's access?

+

+Yes. You can revoke Porter's access at any time by deleting the IAM role (AWS), service principal (Azure), or service account (GCP). Porter can no longer manage or delete resources after access is revoked, so delete your clusters through Porter first.
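+
+If you set up AWS access with the CloudFormation stack, one way to revoke it from a terminal is to delete that stack with the AWS CLI. This sketch assumes the CLI is configured for the correct account and that the stack uses the name `PorterRole`. Check the **CloudFormation** console if your stack name differs.
+
+```bash
+# Delete the stack that created Porter's IAM role (stack name assumed to be PorterRole)
+aws cloudformation delete-stack --stack-name PorterRole
+
+# Wait until deletion finishes; exits non-zero if the deletion fails
+aws cloudformation wait stack-delete-complete --stack-name PorterRole
+```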

diff --git a/cloud-accounts/provisioning-on-aws.mdx b/cloud-accounts/provisioning-on-aws.mdx

deleted file mode 100644

index a9c9795..0000000

--- a/cloud-accounts/provisioning-on-aws.mdx

+++ /dev/null

@@ -1,120 +0,0 @@

----

-title: "Provisioning on AWS"

----

-

-## Quick Installation[](#quick-installation "Direct link to heading")

-

-Porter provisions infrastructure in your own AWS account through the `AssumeRole` [operation](https://docs.aws.amazon.com/IAM/latest/UserGuide/id_roles_use.html).

-Simply put, Porter can _assume_ the role that you create in your own AWS account as long as you declare that you trust Porter to do so.

-This eliminates the need to rely on static AWS credentials and makes it easier to revoke access for security purposes. Porter creates a CloudFormation to manage this role in your account using 3 simple steps.

-

-

- The IAM role Porter creates must be kept in your AWS account for Porter to

- keep managing your infrastructure. Deleting this role will cause Porter to

- fail and stop managing your infrastructure.{" "}

-

-

-1. After selecting AWS as your cloud provider you will be prompted to login to your AWS account. Upon successful login you will be prompted to provide the AWS Account ID of the account in which you wish to provision your cluster in.

- You can find your Account ID at the top right corner of your [AWS console](https://console.aws.amazon.com).

- Input your Account ID and click on **Grant Permissions**.

-

-2. You will be directed to your AWS console to create a CloudFormation stack. If this window did not appear, please ensure that your browser has not blocked the pop-up window. This stack will create the role Porter will assume to manage your infrastructure.

- Scroll to the bottom of the screen. Check the "I acknowledge..." box and click on **Create Stack**. It may take a few minutes for the role to be fully created. After creation you can proceed to selecting provisioning settings.

-

- {" "}

-

- If you want to use Porter with less expansive permissions than what is

- provisioned by the CloudFormation stack, please contact us through the support widget to inquire about Porter

- Enterprise. Porter Enterprise requires minimum IAM permissions that are

- smaller in scope.{" "}

-

-

-4. On the next page, you will be prompted to select the AWS region that you want to provision your infrastructure in.

- Porter supports all AWS regions.

-

-

- For guidance on choosing a region, if you have an external database to

- Porter, we recommend choosing a region close to your database. Otherwise,

- consider choosing a region near your primary customer base.

-

-

-5. If there are any quota limits on your account imposed by AWS, Porter will request them on your behalf by default. Alternatively, if you wish to manually request quota increases,

-follow the links prompting you to the AWS dashboard. If you go the manual route, you will not be able to provision your cluster until these quota increase requests have been approved

-

-6. Once you click **Provision**, Porter will start spinning up the requisite infrastructure in your account. This may take up to 30 minutes.

-

-## Troubleshooting Initial Provisioning[](#troubleshooting-initial-provisioning "Direct link to heading")

-

-### Provisioning takes longer than 30 minutes

-

-If your cluster has been provisioning for more than 45 minutes, there may be an issue. Check the following:

-

-- Verify that the IAM role still exists in your AWS account and hasn't been deleted

-- Check your AWS Service Quotas to ensure they were approved

-- Verify that the selected region supports the requested instance types

-

-If issues persist, contact us through the dashboard chat bot with your project ID.

-

-### Permission errors during provisioning

-

-If you encounter permission errors:

-

-## Troubleshooting Initial Provisioning[](#troubleshooting-initial-provisioning "Direct link to heading")

-

-### Provisioning takes longer than 30 minutes

-

-If your cluster has been provisioning for more than 30 minutes, there may be an issue. Check the following:

-

-- Verify that the IAM role still exists in your AWS account and hasn't been deleted

-- Check your AWS Service Quotas to ensure they were approved

-- Verify that the selected region supports the requested instance types

-

-If issues persist, contact us through the dashboard chat bot with your project ID.

-

-### Permission errors during provisioning

-

-If you encounter permission errors:

-

-- Verify the CloudFormation stack created successfully and the role exists

-- Ensure the role has not been modified after creation

-- Check that your AWS account has not applied SCPs (Service Control Policies) that restrict Porter's actions

-

-### Quota increase requests not approved

-

-AWS typically approves quota increases automatically, but some may require manual review:

-

-- Check the status of quota requests in the [AWS Service Quotas console](https://console.aws.amazon.com/servicequotas/)

-- Requests under manual review typically take 24-48 hours

-- If urgent, you can contact AWS support to expedite the review

-

-### CloudFormation stack creation fails

-

-If the CloudFormation stack fails to create:

-

-- Ensure you have sufficient permissions in your AWS account to create IAM roles

-- Check that you're logged into the correct AWS account (the account ID should match)

-- Verify your account is in good standing and billing is enabled

-

-## Deleting Provisioned Resources[](#deleting-provisioned-resources "Direct link to heading")

-

-

- For Porter to delete your resources, it needs the IAM role that was initially

- created during provisioning. Please make sure to delete the resources first,

- and then delete the IAM role after. If you've already deleted the IAM role,

- you will have to delete the resources [directly from the AWS

- console.](/other/deleting-dangling-resources)

-

-

-To delete resources that have been provisioned by Porter, click on the Infrastructure tab on the sidebar of the dashboard. There you can find the **Delete Cluster** button.

-

-Once the cluster has been deleted, navigate to **CloudFormation Stacks** in your AWS console to delete the role that was created during initial provisioning. This will revoke Porter's access to your account.

-Select the stack named `PorterRole` and click **Delete**.

-

-

- Deleting resources on AWS via Porter may result in dangling resources. After

- clicking delete, please make sure to check your AWS console to see if all

- resources have properly been removed. You can remove any dangling resources

- via either the AWS console or the CLI.

-

-

-For a guide on how to delete the dangling resources, see [Deleting Dangling Resources](/other/deleting-dangling-resources).

diff --git a/cloud-accounts/provisioning-on-azure.mdx b/cloud-accounts/provisioning-on-azure.mdx

deleted file mode 100644

index bb1cabf..0000000

--- a/cloud-accounts/provisioning-on-azure.mdx

+++ /dev/null

@@ -1,231 +0,0 @@

----

-title: "Provisioning on Azure"

----

-

-## Setup Options

-

-You can set up Azure for Porter in two ways:

-

-- **[Option 1: Automated Setup Script (Recommended)](#option-1-automated-setup-script-recommended)** - One-click setup using our script

-- **[Option 2: Manual Setup](#option-2-manual-setup)** - Step-by-step manual configuration

-

-### Option 1: Automated Setup Script (Recommended)

-

-**Prerequisites for the script:**

-

-- Azure CLI installed and authenticated (`az login`)

-- `jq` command-line JSON processor installed

-- Admin permissions on your Azure subscription (for granting API permissions)

-

-Download and run our automated setup script that handles all the configuration steps for you:

-

-```bash

-# Download the setup script

-curl -O https://raw.githubusercontent.com/porter-dev/docs/main/scripts/setup-azure-porter.sh

-

-# Make it executable

-chmod +x setup-azure-porter.sh

-

-# Run the script (optionally provide subscription ID)

-./setup-azure-porter.sh [your-subscription-id]

-```

-

-The script will:

-

-- ✅ Enable all required Azure resource providers

-- ✅ Create the custom `porter-aks-restricted` role

-- ✅ Create the service principal with proper permissions

-- ✅ Add Microsoft Graph API permissions

-- ✅ Grant admin consent (if you have permissions)

-- ✅ Display the credentials needed for Porter

-

-**After running the script:**

-

-1. Copy the displayed credentials to the Porter dashboard when creating your project

-2. Request quota increases if needed (see [Compute Quotas](#quotas) section below)

-3. Proceed with cluster provisioning in Porter

-

-

- If the script fails to grant admin consent automatically, you can grant it

- manually in the Azure Portal: **App registrations** >

- **azure-porter-restricted-sp** > **API permissions** > **Grant admin consent

- for Default Directory**.

-

-

----

-

-If you prefer to set up manually or want to understand each step, continue with **Option 2** below.

-

----

-

-### Option 2: Manual Setup

-

-#### Prerequisites[](#prerequisites "Direct link to heading")

-

-To provision through Porter, you must enable certain Azure resource providers for your subscription.

-

-1. In the Azure portal, search for **Subscriptions**, select the subscription you would like to use to provision, and click the **Resource providers** tab in the subscription console.

-

-

-

-2. Enable the following providers by selecting the providers and clicking **Register**:

-

-- Microsoft.Capacity

-- Microsoft.Compute

-- Microsoft.ContainerRegistry

-- Microsoft.ContainerService

-- Microsoft.ManagedIdentity

-- Microsoft.Network

-- Microsoft.OperationalInsights

-- Microsoft.OperationsManagement

-- Microsoft.ResourceGraph

-- Microsoft.Resources

-- Microsoft.Storage

-

-It might take a few minutes for providers to complete registration. Once you confirm that all resource providers are enabled, proceed to the next section.

-

-#### Creating the Service Principal[](#creating-service-principal "Direct link to heading")

-

-1. Create a new role with the Azure CLI

-

-

- The following commands can be run in the Azure Cloud Shell (selecting the

- `Bash` option) or in your local terminal after installing the Azure CLI and

- authenticating with `az login`.

-

-

-Most of the permissions required by Porter to manage your infrastructure come with Azure's built-in Contributor role. However, this role does not allow for role assignments, which are crucial for Porter.

-

-For this reason, we need to create a new role that combines the Contributor scope along with permissions to create role assignments. We will do this through the Azure CLI.

-

-To get started, set the `PORTER_AZURE_SUBSCRIPTION_ID` environment variable to your subscription id:

-

-```

-PORTER_AZURE_SUBSCRIPTION_ID=xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx

-```

-

-Run the following command to create the role:

-

-```

-envsubst << EOF | az role definition create --role-definition @-

-{

- "assignableScopes": ["/subscriptions/${PORTER_AZURE_SUBSCRIPTION_ID}"],

- "description": "Grants Porter access to manage resources for an AKS cluster.",

- "id": "/subscriptions/${PORTER_AZURE_SUBSCRIPTION_ID}/providers/Microsoft.Authorization/roleDefinitions/porter-aks-restricted",

- "isCustom": true,

- "name": "porter-aks-restricted",

- "permissions": [

- {

- "actions": ["*"],

- "dataActions": [],

- "notActions": [

- "Microsoft.Authorization/elevateAccess/Action",

- "Microsoft.Blueprint/blueprintAssignments/write",

- "Microsoft.Blueprint/blueprintAssignments/delete",

- "Microsoft.Compute/galleries/share/action"

- ],

- "notDataActions": []

- }

- ],

- "roleName": "Contributor",

- "roleType": "BuiltInRole",

- "type": "Microsoft.Authorization/roleDefinitions"

-}

-EOF

-```

-

-2. Create a new service principal through the Azure CLI that uses the role you just created:

-

-```

-az ad sp create-for-rbac \

---name="azure-porter-restricted-sp" \

---role="porter-aks-restricted" \

---scopes="/subscriptions/${PORTER_AZURE_SUBSCRIPTION_ID}"

-```

-

-Running this will display the following output, which you will need when you go to provision your cluster on the Porter dashboard:

-

-```

-{

- "appId": "00000000-0000-0000-0000-000000000000",

- "displayName": "azure-porter-restricted-sp",

- "name": "azure-porter-restricted-sp",

- "password": "0000-0000-0000-0000-000000000000",

- "tenant": "00000000-0000-0000-0000-000000000000"

-}

-```

-

-3. Grant API permissions to your service principal

-

-In your Azure portal, search for **App registrations**. Under the **All applications** tab, you should see the newly-created service principal. Select the principal and navigate to the API Permissions tab:

-

-Select **Add a permission** > **Microsoft Graph** > **Application permissions** and select the following seven permissions:

-

-- Application.ReadWrite.All

-- Directory.ReadWrite.All

-- Domain.Read.All

-- Group.Create

-- Group.ReadWrite.All

-- RoleManagement.ReadWrite.Directory

-- User.ReadWrite.All

-

-Click **Add permissions** to save these permissions, and then click **Grant admin consent for Default Directory** to grant these permissions to your service principal.

-

-#### Compute Quotas [](#quotas "Direct link to heading")

-

-By default, Azure limits the types of resources you can provision in your subscription. To provision a Porter cluster, you will need to request a quota increase for the compute resources you plan to use.

-

-In your Azure portal, navigate to your subscription and select **Usage + quotas**. Set the resource filter to **Compute** and region to your desired region.

-

-While the exact virtual machines provisioned by Porter will depend on your selected region's availability, the following table lists the default virtual machine types that Porter will provision along with recommended initial quota limits:

-

-| Resource Family | Recommended Quota |

-| --------------------------- | ----------------- |

-| Total Regional vCPUs | 40 |

-| Standard Basv2 Family vCPUs | 40 |

-

-After selecting each resource family, click **Request quota increase** and input your desired quota limit. Requests should be approved automatically within a few minutes. If your request is not approved automatically, fill out the support ticket as prompted. Approval is typically granted in a few hours.

-

-## Provisioning Your Porter Cluster[](#provisioning-porter-cluster "Direct link to heading")

-

-1. Once you create your project and select Azure as your cloud provider, you will be prompted to provide the credentials for the service principal you created earlier.

-2. After providing your credentials, hit "Continue". In the case that you do not have cloud credits, we have provided a breakdown of the monthly costs on Azure.

-3. On the next page, you will be able to configure your Azure cluster. For choosing an Azure tier, we recommend choosing a Free tier for non-production workloads, and the Standard tier for production workloads.

- This is something you are able to change after creating your cluster, should your needs change. You will also be prompted to select a region, and have the option to cutomize your machine type. In the case you

- don't have specific preferences, the default is a safe start. This is also something you will be able to update once your cluster has been provisioned.

-

- For guidance on choosing a region, if you have an external database to

- Porter, we recommend choosing a region close to your database. Otherwise,

- consider choosing a region near your primary customer base.

-

-4. Once you click **Provision**, Porter will start spinning up the requisite infrastructure in your account. This may take up to 30 minutes.

-

-## Rotating Service Principal Credentials[](#-rotating-service-principal-credentials "Direct link to heading")

-

-Azure mandates that client secrets for Service Principals(the `password` field displayed when you create a Service Principal) expire every 365 days. When a client secret expires, Porter loses the ability to manage your infrastructure or push new deployments. Note that in the event of a client secret's expiration, _your cluster continues to function normally, and existing workloads are not affected_.

-

-To refresh your client secret:

-

-1. Visit [https://aka.ms/NewClientSecret](https://aka.ms/NewClientSecret) and select the app ID for the service principal that was used to create your cluster (to find your app ID, navigate to `Integrations` on the Porter dashboard and select `Azure`).

-2. Generate a new client secret and copy its value.

-3. Navigate to `Integrations` on the Porter dashboard and select `Azure`.

-4. Replace the value of the `Password` field with the new secret you generated in Azure, and click `Update`.
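To avoid losing access unexpectedly, you might track each secret's expiration date and rotate it ahead of time. The following is a minimal Python sketch, assuming the 365-day lifetime described above; check the actual **Expires** value shown for the secret in the Azure portal before relying on it. The 30-day warning window is an arbitrary, hypothetical choice.

```python
from datetime import date, timedelta

# Lifetime described in this guide; verify against the "Expires" value in the Azure portal.
SECRET_LIFETIME = timedelta(days=365)


def secret_expiry(created_on: date, lifetime: timedelta = SECRET_LIFETIME) -> date:
    """Return the date on which a client secret created on `created_on` expires."""
    return created_on + lifetime


def should_rotate(expires_on: date, today: date, warn_days: int = 30) -> bool:
    """Return True once `today` is within `warn_days` of expiry, or already past it."""
    return (expires_on - today).days <= warn_days
```

For example, a secret created on 2024-01-01 would expire on 2024-12-31 under this assumption, and `should_rotate` starts returning `True` on 2024-12-01.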

-

-## Deleting Provisioned Resources

-

-

- Deleting resources on Azure via Porter may result in dangling resources. After

- clicking delete, please make sure to check your Azure portal to see if all

- resources have properly been removed. You can remove any dangling resources

- via either the Azure console or the Azure CLI.

-

-

-We recommend that you delete all provisioned resources through Porter as well as confirm resources have been deleted from the Azure portal. This will ensure that you do not get charged on Azure for lingering resources.

-

-To delete resources, click on **Additional settings** from the **Infrastructure** tab.

-

-Click **Delete Cluster** to remove the cluster from Porter and delete resources in your Azure console. It may take up to 30 minutes for these resources to be deleted from your Azure subscription.

-

-To confirm that resources have been deleted, navigate to your Azure portal and search for **Resource groups**. You should expect to see a resource group named `-` containing an Azure container registry with your application build images. By default, Porter will not delete your build images, so you will need to delete this resource group manually.

-

-No other resource groups should be present. If any are, you should delete them manually by clicking on the resource group and selecting **Delete resource group**.
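One way to spot dangling resource groups is to compare the groups in your subscription against a list you saved before provisioning with Porter. The following is a minimal Python sketch, assuming you export group names with `az group list --query "[].name" --output tsv` before provisioning and again after deletion. Anything it reports, including the container registry resource group mentioned above, is a candidate for manual deletion.

```python
def leftover_groups(before: str, after: str) -> list[str]:
    """Return resource groups listed in `after` but not in `before`.

    Both arguments are newline-separated group names, e.g. the TSV output
    of `az group list --query "[].name" --output tsv`.
    """
    baseline = {line.strip() for line in before.splitlines() if line.strip()}
    current = {line.strip() for line in after.splitlines() if line.strip()}
    return sorted(current - baseline)
```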

diff --git a/cloud-accounts/provisioning-on-gcp.mdx b/cloud-accounts/provisioning-on-gcp.mdx

deleted file mode 100644

index d93c411..0000000

--- a/cloud-accounts/provisioning-on-gcp.mdx

+++ /dev/null

@@ -1,87 +0,0 @@

----

-title: "Provisioning on Google Cloud"

----

-

-Porter runs on a Kubernetes cluster in your own Google Cloud account. You can provision a cluster through Porter by providing the credentials of a GCP service account.

-

-

- Quick Installation uses **Owner** permissions to set up Porter. You can

- optionally specify the minimum IAM policies for provisioning both a cluster

- and registry.

-

-

-## Prerequisites

-

-### Enabling GCP APIs

-

-To use Porter on GCP, you must first enable some APIs on your project.

-

-1. Navigate to the **APIs & Services** tab of your project.

-

-

-

-2. Click on the **Enable APIs and Services** button at the top. This will bring up a catalog of APIs that you can enable on GCP. Enable the following five APIs:

-

-- Compute Engine API

-- Kubernetes Engine API

-- Cloud Resource Manager API

-- Container Registry API

-- Artifact Registry API

-

-It might take a few minutes for each of these APIs to be enabled. Once you can confirm that all five APIs are enabled from the **APIs & Services** tab, proceed to the next section.
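If you prefer to check from a terminal, the five APIs above correspond to the service IDs in the sketch below (this mapping is our addition, not something shown in the Porter dashboard). The following is a minimal Python sketch, assuming you have exported the enabled services with `gcloud services list --enabled --format="value(config.name)"`. It reports which required APIs are still missing and builds the matching `gcloud services enable` command without running it.

```python
from typing import Iterable

# Console API names mapped to service IDs (mapping added for illustration).
REQUIRED_APIS = {
    "Compute Engine API": "compute.googleapis.com",
    "Kubernetes Engine API": "container.googleapis.com",
    "Cloud Resource Manager API": "cloudresourcemanager.googleapis.com",
    "Container Registry API": "containerregistry.googleapis.com",
    "Artifact Registry API": "artifactregistry.googleapis.com",
}


def missing_apis(enabled_services: Iterable[str]) -> list[str]:
    """Return the required service IDs that are not in `enabled_services`."""
    enabled = {name.strip() for name in enabled_services if name.strip()}
    return [sid for sid in REQUIRED_APIS.values() if sid not in enabled]


def enable_command(missing: list[str]) -> str:
    """Build one `gcloud services enable` command for the missing APIs (empty if none)."""
    return "gcloud services enable " + " ".join(missing) if missing else ""


if __name__ == "__main__":
    # Paste the output of the `gcloud services list` command here.
    sample = "compute.googleapis.com\ncontainer.googleapis.com\n"
    todo = missing_apis(sample.splitlines())
    print(todo)
    print(enable_command(todo))
```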

-

-### Creating a Service Account

-

-1. First, go to your [Google Cloud console](https://console.cloud.google.com/) and navigate to **IAM & Admin** > **Service Accounts**:

-

-

-

-2. Select **Create Service Account**:

-

-

-

-3. After naming your service account, grant it these five roles:

-

-- **Cloud Storage > Storage Admin**

-- **Compute Engine > Compute Admin**

-- **Kubernetes Engine > Kubernetes Engine Admin**

-- **Service Accounts > Service Account User**

-- **Artifact Registry > Artifact Registry Administrator**

-

-Select **Done** to create the service account.
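For reference, the console role names above correspond to the IAM role IDs in the sketch below (our mapping; worth double-checking against Google Cloud's IAM roles reference). This minimal Python sketch only builds the `gcloud projects add-iam-policy-binding` commands that would grant those roles from the CLI instead of the console. `my-project` and the service account email are hypothetical placeholders, and nothing is executed.

```python
import shlex

# Console role names mapped to IAM role IDs (mapping added for illustration).
PORTER_ROLES = {
    "Storage Admin": "roles/storage.admin",
    "Compute Admin": "roles/compute.admin",
    "Kubernetes Engine Admin": "roles/container.admin",
    "Service Account User": "roles/iam.serviceAccountUser",
    "Artifact Registry Administrator": "roles/artifactregistry.admin",
}


def binding_commands(project_id: str, sa_email: str) -> list[str]:
    """Return one add-iam-policy-binding command per role; the commands are not run."""
    member = f"serviceAccount:{sa_email}"
    return [
        " ".join([
            "gcloud", "projects", "add-iam-policy-binding", shlex.quote(project_id),
            f"--member={shlex.quote(member)}",
            f"--role={shlex.quote(role)}",
        ])
        for role in PORTER_ROLES.values()
    ]


if __name__ == "__main__":
    # Hypothetical values; replace with your own project and service account.
    for cmd in binding_commands("my-project", "porter@my-project.iam.gserviceaccount.com"):
        print(cmd)
```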

-

-

-

-4. Once the service account has been created, under **Actions** select **Manage keys**.

-

-

-

-5. Select **ADD KEY** > **Create new key**, then choose **JSON** as your key type. After creation, your JSON key is automatically downloaded as a file. You will need this key later.
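Before uploading the key in the next section, it can help to confirm you downloaded the right file (for example, not an OAuth client JSON). The following is a minimal Python sketch, assuming the standard service account key layout with `type`, `project_id`, `private_key`, and `client_email` fields. These checks are our own illustration, not Porter's validation logic. Treat the key as a secret and never commit it to source control.

```python
import json
from pathlib import Path

REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def key_problems(key: dict) -> list[str]:
    """Return problems found in a parsed service account key (empty list if none)."""
    problems = [f"missing field: {f}" for f in REQUIRED_FIELDS if not key.get(f)]
    if key.get("type") and key["type"] != "service_account":
        problems.append(f"unexpected type: {key['type']!r}")
    if key.get("private_key") and not key["private_key"].startswith("-----BEGIN PRIVATE KEY-----"):
        problems.append("private_key is not a PEM-encoded key")
    return problems


def check_key_file(path: str) -> list[str]:
    """Load a downloaded JSON key file and run key_problems on its contents."""
    return key_problems(json.loads(Path(path).read_text()))
```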

-

-

-

-## Provisioning a cluster

-

-1. Select "GCP" from the cloud providers:

-

-2. Click "Drop a GCP Service Account JSON here, or click to browse." and upload the JSON key file you created in the Prerequisites section, then click "Continue".

-

-3. Select the region you would like to provision in, then click "Provision".

-

-4. Before you can provision a cluster, you must pass all provisioning checks. If any check fails, the dashboard shows troubleshooting steps.

-5. Once you click **Provision**, Porter will start spinning up the requisite infrastructure in your account. This may take up to 30 minutes.

-

-## Deleting Provisioned Resources

-

-

- Deleting resources on GCP via Porter may result in dangling resources. After

- clicking delete, please make sure to check your GCP console to see if all

- resources have properly been removed. You can remove any dangling resources

- via either the GCP console or the gcloud CLI.

-

-

-We recommend that you delete all provisioned resources through Porter as well as confirm resources have been deleted from the GCP console. This will ensure that you do not get charged on GCP for lingering resources.

-

-To delete resources, click on **Additional Settings** from the **Infrastructure** tab.

-

-Click **Delete Cluster** to remove the cluster from Porter and delete resources in your GCP project. It may take up to 30 minutes for these resources to be deleted.

diff --git a/cloud-accounts/tailscale.mdx b/cloud-accounts/tailscale.mdx

new file mode 100644

index 0000000..7903dfe

--- /dev/null

+++ b/cloud-accounts/tailscale.mdx

@@ -0,0 +1,120 @@

+---

+title: "Tailscale VPN"

+sidebarTitle: "Tailscale VPN"

+description: "Configure VPN access for your cluster using Tailscale"

+---

+

+Tailscale is a VPN that creates a secure network between your servers, computers, and cloud instances. Porter integrates with Tailscale to provide secure access to your cluster resources over a private network (Tailnet).

+

+To learn more about how Tailscale works under the hood, check out this [overview on their official blog](https://tailscale.com/blog/how-tailscale-works/).

+

+## Setting up Tailscale

+

+### Step 1: Create an OAuth Client in Tailscale

+

+

+

+ In the Tailscale admin dashboard, navigate to the **Access controls** tab and add the following to your policy file:

+

+ ```json

+ "tagOwners": {

+ "tag:k8s-operator": [],

+ "tag:k8s": ["tag:k8s-operator"],

+ },

+ ```

+

+

+

+ Click **Save** to save the changes.

+

+